What are Kubernetes Secrets?

Introduction to K8s Secrets

In general, a secret is any confidential credential, such as a password, token, or key, that grants privileged access to a protected resource. Secrets typically serve as keys or other means of authenticating to protected computing resources in secure applications, tools, or computing environments. In this article, we discuss how Kubernetes handles secrets and what makes a Kubernetes Secret unique.

Why are Secrets Important?

In a distributed computing environment, it is important that containerized applications remain ephemeral and do not share their resources with other Pods. This is especially true for PKI material and other confidential credentials that Pods need in order to access external resources. For this reason, applications need a way to obtain their credentials from an external source instead of storing them in the application itself.

Kubernetes offers a solution that follows the principle of least privilege. Kubernetes Secrets are separate objects that an application Pod can reference to obtain the credentials it needs for access to external resources. A Pod can only use a Secret that it references explicitly: as files in a mounted volume, as container environment variables, or when the kubelet pulls the image for the Pod.

How Does Kubernetes Leverage Secrets?

The Kubernetes API provides various built-in secret types for a variety of use cases found in the wild. When you create a secret, you can declare its type by leveraging the `type` field of the Secret resource, or an equivalent `kubectl` command line flag. The Secret type is used for programmatic interaction with the Secret data.

Ways to create K8s Secrets

There are multiple ways to create a Kubernetes Secret.

Creating a Secret via kubectl

To create a Secret via kubectl, first create text files to store the contents of your secret, in this case username.txt and password.txt:

echo -n 'admin' > ./username.txt
echo -n '1f2d1e2e67df' > ./password.txt

Then use the kubectl create secret command to package these files into a Secret. The final command looks like this:

kubectl create secret generic db-user-pass \
  --from-file=./username.txt \
  --from-file=./password.txt

The output should look like this:

secret/db-user-pass created

Creating a Secret from config file

You can also store your secure data in a JSON or YAML file and create a Secret object from that. The Secret resource contains two distinct maps:

- data: used to store arbitrary data, encoded using base64

- stringData: allows you to provide Secret data as unencoded strings

The keys of `data` and `stringData` must consist of alphanumeric characters, `-`, `_`, or `.`.
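A small pre-flight check can catch invalid key names before a manifest is submitted. The sketch below assumes a POSIX shell; the function name is_valid_secret_key is hypothetical and is not part of kubectl.

```shell
# Return success only if the argument is a non-empty string made up of
# letters, digits, '-', '_' or '.'.
is_valid_secret_key() {
  case "$1" in
    '' | *[!A-Za-z0-9._-]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Try the check on a few sample key names.
for key in username tls.crt 'bad key'; do
  if is_valid_secret_key "$key"; then
    echo "ok: $key"
  else
    echo "invalid: $key"
  fi
done
```

Here the first two names pass and the third is rejected because it contains a space.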

For example, to store two strings in a Secret using the data field, you can convert the strings to base64 as follows:

echo -n 'admin' | base64

The output should look like this:

YWRtaW4=

And for the next one:

echo -n '1f2d1e2e67df' | base64

The output should look similar to:

MWYyZDFlMmU2N2Rm
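Keep in mind that base64 is an encoding, not encryption: anyone who can read the encoded value can recover the original. As a quick sanity check, the following sketch decodes the two strings above. The helper name decode_b64 is only illustrative, and the -d flag assumes a GNU-style base64 (other platforms may spell the decode flag differently).

```shell
# Decode a base64 string passed as the first argument.
# Assumption: base64 accepts -d for decoding, as GNU coreutils does.
decode_b64() {
  printf '%s' "$1" | base64 -d
}

# Decode the two values produced above; echo adds the trailing newline.
decode_b64 'YWRtaW4='; echo
decode_b64 'MWYyZDFlMmU2N2Rm'; echo
```

This should print the original admin and 1f2d1e2e67df strings.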

You can then write a secret config that looks like this:

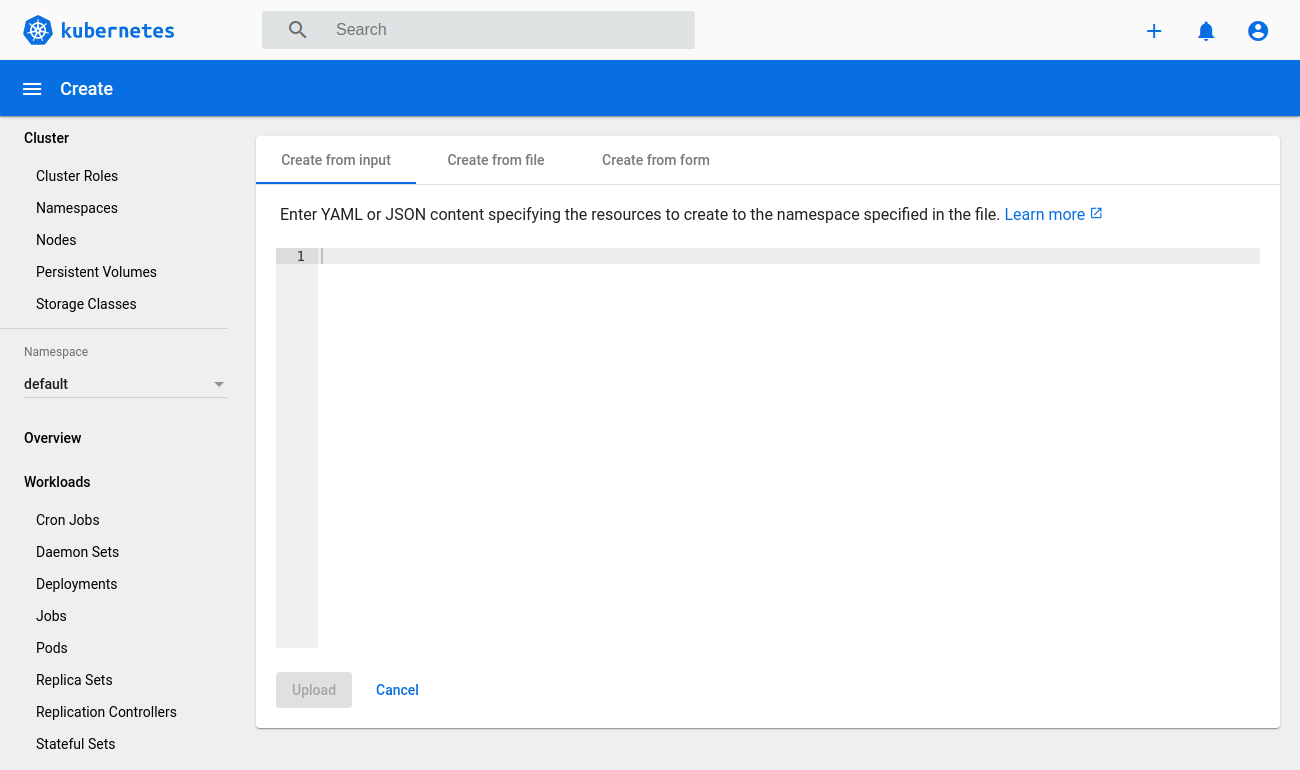

apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: YWRtaW4=
  password: MWYyZDFlMmU2N2Rm
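For comparison, the same Secret could be expressed with stringData, which leaves the base64 encoding to Kubernetes. The sketch below writes such a manifest to a file; the file name mysecret-stringdata.yaml is a hypothetical choice, and the values are the sample credentials used throughout this article.

```shell
# Write an equivalent manifest that uses stringData instead of data.
# The file name is an assumption made for this example.
cat > mysecret-stringdata.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
stringData:
  username: "admin"
  password: "1f2d1e2e67df"
EOF
```

With a cluster available, the file could then be submitted with kubectl apply -f mysecret-stringdata.yaml.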

Creating a secret using kustomize

You can also generate a Secret object by defining a secretGenerator in a kustomization.yaml file that references other existing files. For example, the following kustomization file references the ./username.txt and ./password.txt files:

secretGenerator:
- name: db-user-pass
  files:
  - username.txt
  - password.txt

Then apply the directory containing the kustomization.yaml to create the Secret:

kubectl apply -k .

The output should look similar to this:

secret/db-user-pass-96mffmfh4k created

Editing a Secret

You can edit an existing Secret with the following command:

kubectl edit secrets mysecret

This command opens your default configured editor and lets you update the base64-encoded Secret values in the data field:

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  username: YWRtaW4=
  password: MWYyZDFlMmU2N2Rm
kind: Secret
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: { ... }
  creationTimestamp: 2016-01-22T18:41:56Z
  name: mysecret
  namespace: default
  resourceVersion: "164619"
  uid: cfee02d6-c137-11e5-8d73-42010af00002
type: Opaque

How to Use Secrets

Secrets can be used in a variety of ways, such as being mounted as data volumes or exposed as environment variables to be used by a container in a Pod. Secrets can also be used by other parts of the system, without being directly exposed to the Pod. For example, Secrets can hold credentials that other parts of the system should use to interact with external systems on your behalf.
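As a sketch of the volume approach, the following writes a hypothetical Pod manifest that mounts the mysecret Secret as files. The Pod name, volume name, mount path, and output file name are assumptions chosen for illustration. When a Secret is mounted this way, each key appears as a file under the mount path, so the helper read_secret_file (also hypothetical) simply reads a file.

```shell
# Write a Pod manifest that mounts the Secret "mysecret" as a read-only volume.
cat > secret-volume-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: secret-volume-pod            # hypothetical Pod name
spec:
  containers:
  - name: mycontainer
    image: redis
    volumeMounts:
    - name: secret-volume
      mountPath: /etc/secret-volume  # hypothetical mount path
      readOnly: true
  volumes:
  - name: secret-volume
    secret:
      secretName: mysecret
EOF

# Inside the container, read one key of the mounted Secret.
# $1 is the mount path and $2 is the key name.
read_secret_file() {
  cat "$1/$2"
}
```

Inside the running container, read_secret_file /etc/secret-volume username would then print the decoded username.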

Using Secrets as environment variables

To use a secret in an environment variable in a Pod, you’ll want to:

- Create a secret or use an existing one. Multiple Pods can reference the same secret.

- Modify your Pod definition so that each container that needs the value of a secret key has an environment variable for that key. Each such environment variable should specify the Secret’s name and key in `env[].valueFrom.secretKeyRef`.

- Modify your image and/or command line so that the program looks for values in the specified environment variables.

This is an example of a Pod that uses secrets from environment variables:

apiVersion: v1
kind: Pod
metadata:
  name: secret-env-pod
spec:
  containers:
  - name: mycontainer
    image: redis
    env:
      - name: SECRET_USERNAME
        valueFrom:
          secretKeyRef:
            name: mysecret
            key: username
      - name: SECRET_PASSWORD
        valueFrom:
          secretKeyRef:
            name: mysecret
            key: password
  restartPolicy: Never
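The last step in the list above, making the program look for the values, can be sketched as a small startup check. This is an illustrative example rather than anything Kubernetes requires: the function name missing_secret_env is hypothetical, and the script assumes printenv is available in the container image.

```shell
# Print the name of each listed environment variable that is unset or empty.
missing_secret_env() {
  for name in "$@"; do
    [ -n "$(printenv "$name")" ] || echo "$name"
  done
}

# Check the two variables defined in the Pod spec above.
missing=$(missing_secret_env SECRET_USERNAME SECRET_PASSWORD)
if [ -n "$missing" ]; then
  echo "missing secret environment variables: $missing" >&2
else
  echo "all secret environment variables are set"
fi
```

A real entrypoint would probably exit with an error at this point instead of only printing a message, so the Pod fails fast when a Secret reference is misconfigured.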

Using Immutable Secrets

Kubernetes provides an option to set individual Secrets as immutable. For clusters that extensively use Secrets (at least tens of thousands of unique Secret to Pod mounts), preventing changes to their data has the following advantages:

- protects you from accidental (or unwanted) updates that could cause application outages

- improves performance of your cluster by significantly reducing load on kube-apiserver, by closing watches for secrets marked as immutable.

This feature is controlled by the ImmutableEphemeralVolumes feature gate, which is enabled by default since v1.19. You can create an immutable Secret by setting the immutable field to true. For example:

apiVersion: v1
kind: Secret
metadata:
  ...
data:
  ...
immutable: true

Built-in Secret Types

- Opaque Secrets – The default Secret type, used when `type` is omitted from a Secret configuration file.

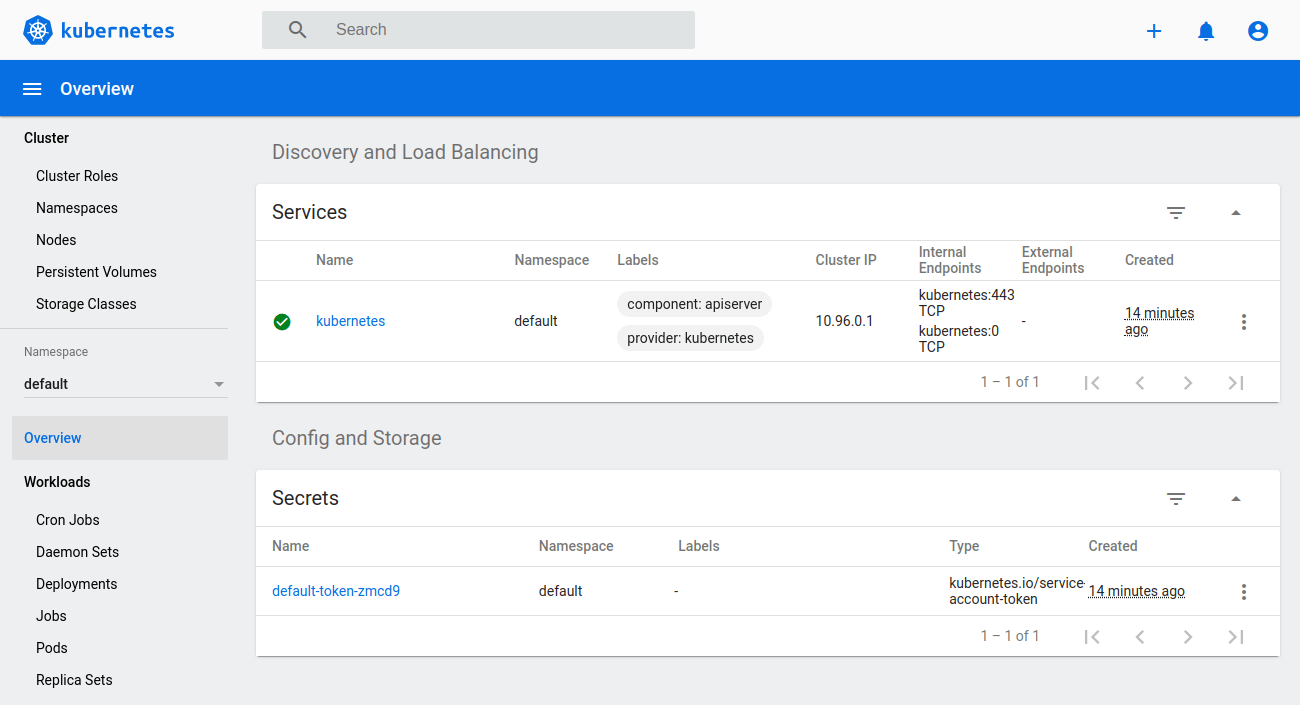

- Service account token Secrets – Used to store a token that identifies a service account. When using this Secret type, you need to ensure that the `kubernetes.io/service-account.name` annotation is set to an existing service account name.

- Docker config Secrets – Stores the credentials for accessing a Docker registry for images.

- Basic authentication Secret – Used for storing credentials needed for basic authentication. When using this Secret type, the `data` field of the Secret must contain the `username` and `password` keys.

- SSH authentication secrets – Used for storing data used in SSH authentication. When using this Secret type, you will have to specify an `ssh-privatekey` key-value pair in the `data` (or `stringData`) field as the SSH credential to use.

- TLS secrets – For storing a certificate and its associated key, typically used for TLS. This data is primarily used with TLS termination of the Ingress resource, but may be used with other resources or directly by a workload. When using this type of Secret, the `tls.key` and `tls.crt` keys must be provided in the `data` (or `stringData`) field of the Secret configuration.

- Bootstrap token Secrets – Used for tokens used during the node bootstrap process. These tokens are used to sign well-known ConfigMaps.

Conclusion

So now that you’ve had a brief introduction to what a secret is, you’re ready to learn how to use them in practice. Stay tuned to our blog where we’re going to be learning how to apply the concepts we learned here in a practical environment.